The AI Boom Was Built, Not Born: A 15-Year Journey from Research to Agents

By: Mohammed Moussa

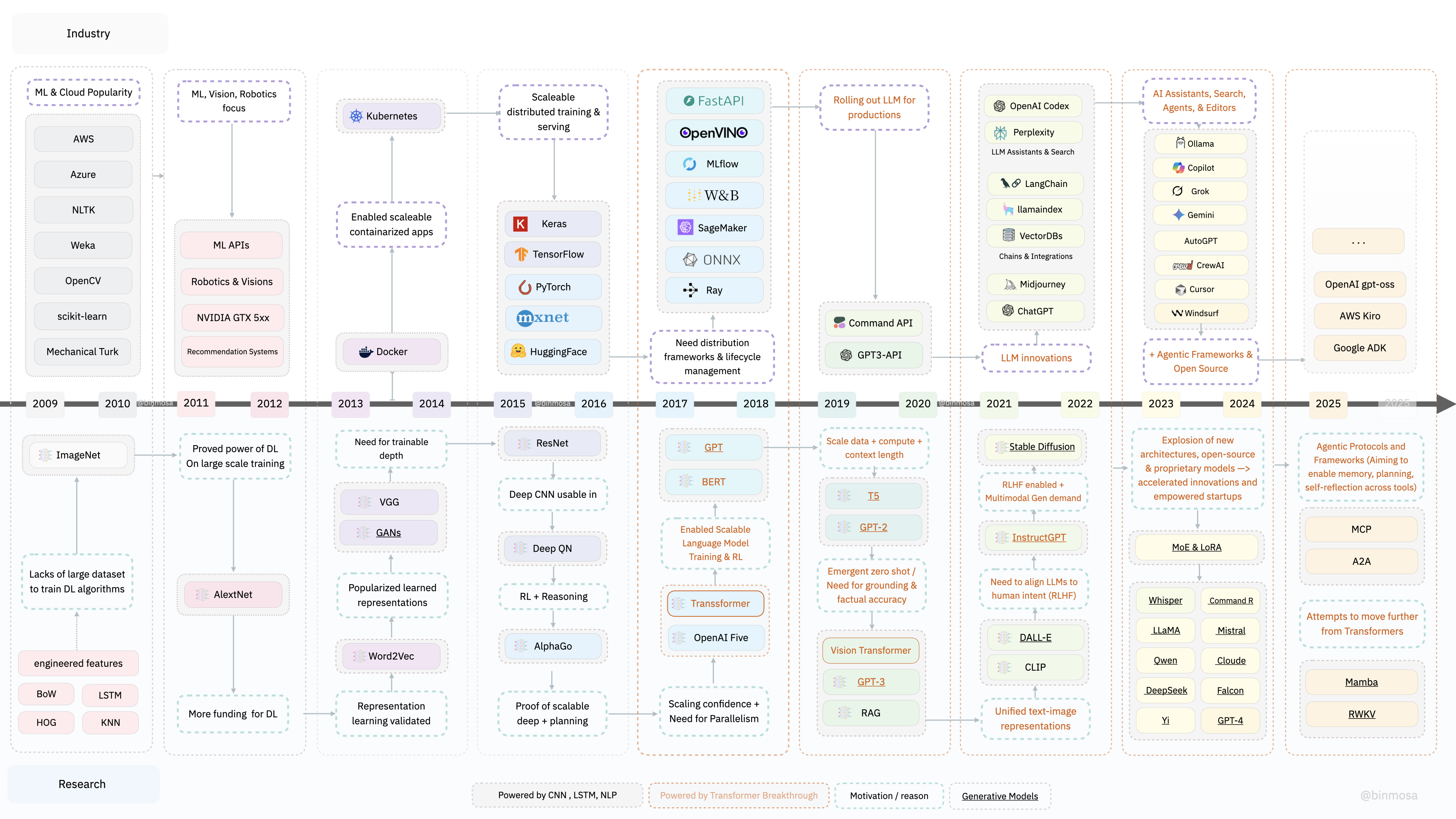


How did we go from handcrafted features and CNNs to autonomous agents like AutoGPT and emerging protocols like MCP?

Spoiler: It didn’t happen overnight.

In the last 18 months, the world has been amazed — even overwhelmed — by the pace of advancement in AI. ChatGPT, Midjourney, LLaMA, AutoGPT, Claude, and Gemini dominate headlines.

But if you zoom out, what we’re seeing today is not a sudden leap — it’s the compound result of over 15 years of innovation across research, infrastructure, tooling, and application design.

So I decided to map it out.

Here’s the full story behind the visual timeline — tracing the evolution of AI from early deep learning to the emerging world of agentic intelligence.

🧠 2009–2012: The Deep Learning Awakening

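In 2009, machine learning was already in play, but limited. Most systems relied on hand-engineered features and classical models, with neural networks still a niche tool:

- Bag-of-Words (BoW) features for text

- HOG descriptors for images

- K-Nearest Neighbors (KNN) and other classical classifiers

- LSTMs, recurrent networks that long predated the deep learning boom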
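To make "hand-engineered features" concrete, here is a minimal Bag-of-Words sketch: a document becomes a vector of word counts over a fixed vocabulary. The vocabulary and sentences are made-up examples, and whitespace tokenization is a simplifying assumption.

```python
from collections import Counter


def bag_of_words(text: str, vocabulary: list[str]) -> list[int]:
    """Count how often each vocabulary word occurs in `text`.

    Word order is discarded: only the counts survive, which is the kind of
    information loss that learned representations later addressed.
    """
    counts = Counter(text.lower().split())  # naive whitespace tokenization
    return [counts[word] for word in vocabulary]


vocab = ["the", "cat", "dog", "sat"]
print(bag_of_words("The cat sat on the mat", vocab))  # [2, 1, 0, 1]

# Two sentences with opposite meanings map to the same feature vector.
print(bag_of_words("the dog sat on the cat", vocab)
      == bag_of_words("the cat sat on the dog", vocab))  # True
```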

Large-scale labeled datasets for testing deep learning ideas were scarce.

ImageNet (introduced in 2009) changed that, and in 2012 AlexNet shattered its image classification benchmark.

This moment:

- Revived faith in deep learning

- Popularized the ReLU activation function

- Brought new funding and momentum into deep learning research
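Why did ReLU matter? A deliberately simplified sketch: backpropagation multiplies one local derivative per layer, and the sigmoid's derivative never exceeds 0.25, while ReLU's is exactly 1 for positive inputs. This ignores weight matrices entirely and only compares the activation derivatives; the 10-layer depth is an arbitrary assumption.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    # Derivative of the sigmoid; it peaks at 0.25 when x == 0.
    s = sigmoid(x)
    return s * (1.0 - s)


def relu(x: float) -> float:
    return max(0.0, x)


def relu_grad(x: float) -> float:
    # Derivative of ReLU: 1 for positive inputs, 0 otherwise.
    return 1.0 if x > 0 else 0.0


# Multiply one activation derivative per layer (weights ignored).
# Best case for the sigmoid: every pre-activation sits exactly at 0.
layers = 10
print(sigmoid_grad(0.0) ** layers)  # 0.25**10, roughly 9.5e-07
print(relu_grad(1.0) ** layers)     # 1.0
```

Even in the sigmoid's best case, the signal shrinks by about a million times over ten layers, while ReLU passes it through unchanged for active units.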

📈 2013–2016: Representation Learning & Early RL

With momentum growing, the focus shifted to learning better representations:

- Word2Vec enabled semantic embeddings

- GANs introduced generative capabilities

- VGG pushed CNN depth

- AlphaGo and Deep Q-Networks (DQN) showed that deep reinforcement learning could master complex games, from Atari to Go
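DQN's core idea can be sketched with the tabular Q-learning update it builds on: nudge the value estimate toward a reward plus the discounted best value of the next state. The states, actions, reward, and hyperparameters (`alpha`, `gamma`) below are illustrative assumptions, and terminal-state handling is omitted.

```python
from collections import defaultdict


def q_learning_update(q, state, action, reward, next_state, actions,
                      alpha=0.1, gamma=0.99):
    """One tabular Q-learning step toward the Bellman target.

    DQN keeps this target but replaces the table `q` with a neural network
    trained to predict Q(state, action) from raw inputs such as pixels.
    """
    best_next = max(q[(next_state, a)] for a in actions)
    target = reward + gamma * best_next
    q[(state, action)] += alpha * (target - q[(state, action)])
    return q[(state, action)]


q = defaultdict(float)  # unseen (state, action) pairs start at 0.0
actions = ["left", "right"]
print(q_learning_update(q, "s0", "right", 1.0, "s1", actions))  # 0.1
```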

Meanwhile, Docker and Kubernetes emerged — laying the groundwork for reproducible ML experimentation and scalable deployment.

🧠 2017–2020: The Transformer Breakthrough

This was the true inflection point.

In 2017, the paper “Attention Is All You Need” introduced the Transformer, an architecture that reshaped the future of AI.

Much of what followed traces back to this:

- BERT for masked language modeling

- GPT-2 and GPT-3 for generative fluency

- T5 for text-to-text tasks

- RAG for retrieval-augmented grounding
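The Transformer's core operation is scaled dot-product attention, softmax(QKᵀ/√d_k)V, as defined in the 2017 paper. Here is a minimal single-head sketch in plain Python; masking, learned projections, multiple heads, and batching are all omitted, and the toy vectors are made up.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Each argument is a list of vectors (lists of floats). Every query
    scores all keys, and its output is a weighted average of the values.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


queries = [[1.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
# The query matches the first key best, so the output leans toward [10, 0].
print(attention(queries, keys, values))
```

Because every query looks at every key, cost grows quadratically with sequence length, which is the pressure behind the post-Transformer work discussed later.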

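The Transformer era also coincided with an infrastructure boom:

- Hugging Face made pretrained models accessible

- Ray (distributed compute), MLflow (experiment tracking and model management), and FastAPI (lightweight serving APIs) made training and serving easier to scale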

🎨 2021–2022: Multimodal & Aligned Models

LLMs were powerful — but raw. This phase focused on alignment and creativity.

- InstructGPT used RLHF (Reinforcement Learning from Human Feedback) to align models with human intent

- Codex translated natural language into code and powered GitHub Copilot

- CLIP learned a shared embedding space for images and text

- DALL·E and Stable Diffusion made AI-generated art mainstream
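In InstructGPT's RLHF pipeline, labelers ranked model outputs, a reward model was trained on those comparisons, and the language model was then optimized against that reward with PPO. The reward model's pairwise loss can be sketched as below; the scalar scores stand in for a real reward model's outputs and are made up.

```python
import math


def reward_model_loss(r_chosen: float, r_rejected: float) -> float:
    """Pairwise preference loss: -log(sigmoid(r_chosen - r_rejected)).

    It is small when the reward model scores the human-preferred response
    well above the rejected one, and large when the ranking is reversed.
    """
    margin = r_chosen - r_rejected
    # log1p(exp(-m)) is algebraically equal to -log(sigmoid(m)).
    return math.log1p(math.exp(-margin))


print(reward_model_loss(2.0, -1.0))  # small: ranking agrees with the label
print(reward_model_loss(-1.0, 2.0))  # large: ranking disagrees
```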

This is where AI became not just intelligent — but expressive.

⚙️ 2023: The LLM App Layer Explosion

LLMs hit the real world — and the ecosystem exploded:

- LangChain made it easier to connect LLMs to tools and memory

- Vector databases such as Pinecone and Weaviate powered semantic search and context retrieval

- AutoGPT demonstrated autonomous task execution

- Cursor, Perplexity, Midjourney, Grok, Gemini brought AI to everyday users
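Vector databases serve a simple idea at scale: store embeddings, return the ones nearest to a query embedding, and let the app paste the matching documents into the LLM's prompt as context. A brute-force sketch follows; the 3-dimensional "embeddings" and document ids are made up, and this does not reflect the Pinecone or Weaviate APIs.

```python
import math


def cosine(a, b):
    # Cosine similarity: 1.0 means the vectors point the same way.
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def top_k(query_vec, index, k=2):
    """Return the ids of the k stored vectors most similar to the query.

    `index` maps document ids to embedding vectors. Production vector
    databases use approximate nearest-neighbor indexes, not a linear scan.
    """
    ranked = sorted(index, key=lambda doc_id: cosine(query_vec, index[doc_id]),
                    reverse=True)
    return ranked[:k]


index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "office-hours": [0.0, 0.2, 0.9],
}
print(top_k([0.8, 0.2, 0.0], index, k=1))  # ['refund-policy']
```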

Meanwhile, open-weight models like LLaMA, Mistral, and Yi became powerful and accessible.

LLMs became platforms.

🤖 2024: From Tools to Agents

The next leap: autonomous AI agents.

These aren’t just chatbots — they plan, reason, and take action:

- CrewAI, Windsurf, and Cursor coordinated agents across tools and multi-step tasks

- Mixture of Experts (MoE) architectures improved compute efficiency by activating only a few expert subnetworks per token

- Agentic stacks added long-horizon memory and reflection
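MoE layers save compute by routing each token to only a few experts. A minimal sketch of top-k gating, one common routing scheme: keep the k highest router scores and softmax over just those. The toy "experts" are plain functions standing in for feed-forward subnetworks, and the router logits are made up.

```python
import math


def top_k_gating(router_logits, k=2):
    """Pick the k highest-scoring experts and renormalize their weights.

    Only the selected experts run for this token, so compute grows with k
    rather than with the total number of experts.
    """
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i],
                 reverse=True)[:k]
    m = max(router_logits[i] for i in top)
    exps = {i: math.exp(router_logits[i] - m) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def moe_layer(x, experts, router_logits, k=2):
    # Weighted sum of only the selected experts' outputs.
    gates = top_k_gating(router_logits, k)
    return sum(weight * experts[i](x) for i, weight in gates.items())


experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
logits = [0.1, 2.0, 2.0, -1.0]
print(top_k_gating(logits, k=2))          # experts 1 and 2, 0.5 each
print(moe_layer(3.0, experts, logits, k=2))  # 0.5 * 6.0 + 0.5 * 9.0 = 7.5
```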

We're moving toward co-pilots that can actually help you build, debug, research, and create — not just chat.

🧠 2025 and Beyond: Agentic Protocols & Post-Transformer Futures

What's coming next?

🧩 Agentic Protocols:

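Protocols like MCP (Model Context Protocol, introduced by Anthropic) and A2A (Agent2Agent, introduced by Google) aim to standardize how agents connect to the outside world:

- Connect models to tools, data sources, and context through a common interface (MCP)

- Let agents built on different frameworks discover each other and delegate tasks (A2A)

- Coordinate across tools and agents without one-off integrations

Memory across tasks, long-term planning, and reflection still live mostly in the agent frameworks built on top; the protocols supply the connective layer those capabilities rely on.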
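Under the hood, MCP messages are JSON-RPC 2.0. The sketch below only builds the requests a client would send to list a server's tools and call one; the transport, the initialization handshake, and response handling are omitted, and the `get_weather` tool and its arguments are hypothetical.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # request ids should be unique within a session


def make_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the message format MCP builds on."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Ask a server which tools it exposes, then call one of them.
# "get_weather" is hypothetical; real tool names come from tools/list.
print(make_request("tools/list"))
print(make_request("tools/call",
                   {"name": "get_weather", "arguments": {"city": "Cairo"}}))
```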

🧪 Post-Transformer Models:

New architectures are being tested:

- Mamba, a selective state space model (SSM), for efficient long-sequence modeling

- RWKV, an RNN that trains in parallel like a Transformer, for lightweight inference
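State space models replace attention with a recurrence whose state has a fixed size. Below is a minimal scalar sketch of a plain linear SSM. It is not Mamba itself: Mamba makes the parameters (here `a`, `b`, `c`) depend on the input, which is what "selective" means, and computes the recurrence with a hardware-aware parallel scan; both are omitted. The parameter values are arbitrary assumptions.

```python
def ssm_scan(xs, a=0.9, b=1.0, c=1.0):
    """Run a scalar linear state space model over a sequence.

    h_t = a * h_{t-1} + b * x_t,   y_t = c * h_t

    The state h has a fixed size, so each new token costs O(1) time and
    memory, whereas attention revisits every earlier token.
    """
    h = 0.0
    ys = []
    for x in xs:
        h = a * h + b * x  # fold the new input into the running state
        ys.append(c * h)
    return ys


print(ssm_scan([1.0, 0.0, 0.0]))  # the input decays: roughly [1.0, 0.9, 0.81]
```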

These early results are promising — hinting at an evolution beyond transformers.

🔁 A Story of Compounding Innovation

Each breakthrough built on the last.

None of this was random. It was:

- Research ➜ Infrastructure ➜ Language ➜ Products ➜ Agents

- CNNs ➜ Transformers ➜ LLMs ➜ Multimodal ➜ Agentic AI

We’re standing on the shoulders of years of engineering, openness, and belief in scale.


💬 What do you think?

Which moment was the most pivotal?

Is the next wave already here — or still coming?

🔖 Tags

#AI #MachineLearning #DeepLearning #LLMs #Transformers #AgenticAI #GPT4 #AutoGPT #OpenSourceAI #Infrastructure #Mamba #RWKV #LangChain #RLHF #Timeline #AIHistory #FutureOfAI